For years, programmatic SEO (pSEO) has lived in an awkward space.

When it works, it looks like magic.

When it fails, it gets dismissed as “thin AI spam.”

Grokipedia is one of the clearest real-world examples of AI-driven pSEO working at extreme scale. It did not get there by gaming Google. It got there by executing the fundamentals better than almost anyone else.

And yes, it had unfair advantages.

But that doesn’t invalidate the lesson. It clarifies it.

Let’s break this down properly, using actual data, structure, and first-principles thinking.

The Data: What Grokipedia Achieved in a Very Short Time

Based on live third-party SEO tooling and AI citation tracking, Grokipedia currently shows:

Search & Visibility Metrics

- ~827,000 ranking organic keywords

- ~616,000 monthly organic visits

- Top 3 rankings for ~11,000 keywords

- $30k+ estimated traffic value

- Zero paid traffic

This alone puts Grokipedia ahead of the vast majority of AI-generated content projects, most of which never escape the “indexed but invisible” stage.

Authority & Link Signals

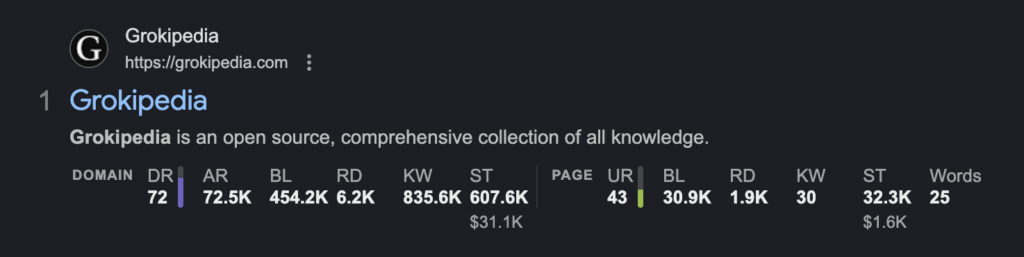

- Domain Rating (DR): 72

- URL Rating (UR): 43

- ~476,000 backlinks

- ~6,200 referring domains

Those are not “new site” numbers. Those are numbers most editorial brands take a decade to build.

AI Search & Answer Engine Citations

Grokipedia is also heavily cited across AI systems:

- Google AI Overviews: 600+ citations

- ChatGPT: ~211,000 page-level references

- Gemini: ~24,600 citations

- Perplexity: 50+ citations

- Copilot: ~3,400 citations

This is critical.

Grokipedia is not just ranking in classic search. It is being used as a reference layer by LLMs, which is exactly where search behavior is moving.

Why Most AI pSEO Projects Fail (And Why Grokipedia Didn’t)

The common narrative is that “AI content doesn’t work.”

That is wrong.

Thin systems don’t work.

Most failed pSEO attempts share the same mistakes:

- Single-page templates stretched across thousands of URLs

- Keyword-first logic instead of concept-first coverage

- No internal semantic reinforcement

- No real user engagement signals

- No trust, no authority, no reason for Google or LLMs to care

Grokipedia avoided every one of these traps.

Concept Coverage, Not Keyword Coverage

Grokipedia did not target keywords.

It targeted entire concepts.

Instead of building near-duplicate pages such as:

- “What is X?”

- “X definition”

- “X explained”

It built dense, interlinked topic graphs where:

- Every concept has context

- Every definition links outward

- Every page reinforces adjacent knowledge

- No page exists in isolation

This mirrors how LLMs associate related concepts. It is also, increasingly, how Google appears to evaluate topical authority.

In short:

Grokipedia looks less like an SEO site and more like a structured knowledge base.

That matters.
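Here is a minimal Python sketch that makes “no page exists in isolation” concrete. It audits an internal-link graph for two problems: orphan pages, which nothing links to, and dead ends, which link nowhere. The page slugs and the `audit_graph` helper are hypothetical. They are not Grokipedia's actual structure or tooling, and a real audit would build the graph from a site crawl.

```python
from collections import defaultdict

# Hypothetical internal-link graph: each page slug maps to the pages it links to.
# Slugs are illustrative only, not taken from Grokipedia.
links = {
    "machine-learning": ["neural-network", "gradient-descent", "overfitting"],
    "neural-network": ["machine-learning", "backpropagation"],
    "backpropagation": ["gradient-descent", "neural-network"],
    "gradient-descent": ["machine-learning", "backpropagation"],
    "overfitting": ["machine-learning"],
    "regularization": [],  # nothing links here, and it links nowhere
}


def audit_graph(links: dict[str, list[str]]) -> tuple[list[str], list[str]]:
    """Return (orphans, dead_ends) for an internal-link graph.

    orphans:   pages that no other page links to
    dead_ends: pages with no outbound internal links
    """
    inbound = defaultdict(int)
    for source, targets in links.items():
        for target in targets:
            if target != source:  # a self-link does not count as support
                inbound[target] += 1
    orphans = sorted(page for page in links if inbound[page] == 0)
    dead_ends = sorted(page for page, targets in links.items() if not targets)
    return orphans, dead_ends


orphans, dead_ends = audit_graph(links)
print("orphans:", orphans)      # orphans: ['regularization']
print("dead ends:", dead_ends)  # dead ends: ['regularization']
```

In a topic graph like the one described above, both lists should stay empty. Any page that shows up in either one is, in effect, a standalone article rather than part of the cluster.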

Semantic Interconnection at Scale

This is the real pSEO lesson most people miss.

Grokipedia works because:

- Thousands of pages are semantically connected

- Internal linking is meaningful, not decorative

- Entity relationships are explicit, not implied

- Pages support each other instead of competing

Each page:

- Strengthens the topical cluster

- Shortens the click path crawlers need to reach related pages

- Improves discovery and indexing

- Signals completeness to ranking systems

This is why Grokipedia ranks for more keywords (~827,000) than it receives monthly organic visits (~616,000) and still wins. It is building long-term authority, not just chasing clicks.
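A breadth-first search over a link graph can illustrate the crawl-path point. This sketch uses a hypothetical `click_depths` helper and made-up page names. It assumes that click depth from the homepage is a reasonable proxy for how quickly crawlers reach a page. It compares a site where each page links only to the next one against the same pages with cross-links between related topics.

```python
from collections import deque


def click_depths(links: dict[str, list[str]], start: str) -> dict[str, int]:
    """Breadth-first search: minimum number of clicks from `start` to each reachable page."""
    depth = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depth:  # first visit in BFS is the shortest path
                depth[target] = depth[page] + 1
                queue.append(target)
    return depth


# Hypothetical site where each page only links to the next one (a chain).
chain = {"home": ["a"], "a": ["b"], "b": ["c"], "c": ["d"], "d": []}

# The same pages, plus cross-links between related topics.
interlinked = {
    "home": ["a", "b"],
    "a": ["b", "c"],
    "b": ["c", "d"],
    "c": ["d", "a"],
    "d": ["a"],
}

print(max(click_depths(chain, "home").values()))        # 4
print(max(click_depths(interlinked, "home").values()))  # 2
```

In this toy example, cross-linking cuts the deepest page from four clicks to two. On a large site, the same effect keeps deep pages within easy reach of crawlers.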

The Unfair Advantage: Instant Credibility

Now let’s address the elephant in the room.

Grokipedia had:

- Massive PR exposure

- Immediate backlink velocity

- Real user engagement from day one

- Widespread discussion and sharing

And yes, that matters. A lot.

The association with Elon Musk and the broader xAI ecosystem meant Grokipedia skipped years of the typical “prove yourself” phase.

Google did not have to guess whether it was valuable.

The internet told Google it was.

That does not make the model invalid.

It just means the trust signals were amplified faster.

Why Smaller pSEO Sites Fail When They Copy This

Many teams looked at Grokipedia and said:

“Let’s do the same thing with AI.”

Then they:

- Generated shallow pages

- Skipped editorial depth

- Ignored semantic architecture

- Launched without distribution or authority

- Expected rankings to appear magically

That is not how this works.

You cannot fake:

- Depth

- Interconnectedness

- Real engagement

- Trust at scale

AI accelerates execution. It does not replace strategy.

The Real Lesson: Systems Beat Content

Grokipedia proves that:

- AI content can rank

- AI content can scale

- AI content can dominate AI search

But only when:

- The system is designed for knowledge, not keywords

- Pages reinforce each other

- Authority is built intentionally

- Distribution is part of the launch, not an afterthought

This is not “AI SEO.”

This is knowledge engineering applied to search.

Where This Is Going Next

As LLM-driven discovery becomes normal:

- Citation density matters more than blue links

- Entity coverage matters more than keyword density

- Structured knowledge beats isolated articles

- Brands that look like reference sources win

Grokipedia is not an anomaly.

It is an early example of the direction search is moving.

Final Thought

Programmatic SEO did not fail because of AI.

It failed because people built thin systems and expected fat returns.

Grokipedia shows what happens when:

- Depth is real

- Structure is intentional

- Scale is paired with authority

- AI is used as an amplifier, not a shortcut

And the best part?

With modern AI tooling, building this level of depth is now possible without a Wikipedia-sized editorial team.

The bar has moved.

But it is not unreachable.

If you’re exploring how to build real AI visibility, programmatic SEO systems, or knowledge-led content at scale, this is exactly the kind of work we’re doing at Saigon Digital. You can see how we approach AI search, SEO, and scalable content systems here.
